How To Filter Microsoft's Hitler-Loving AI

- By Kelly Strain

- Technology, CleanSpeak, Strategies

- March 30, 2016

Microsoft apologizes after its artificial intelligence experiment backfires. What could they have done differently?

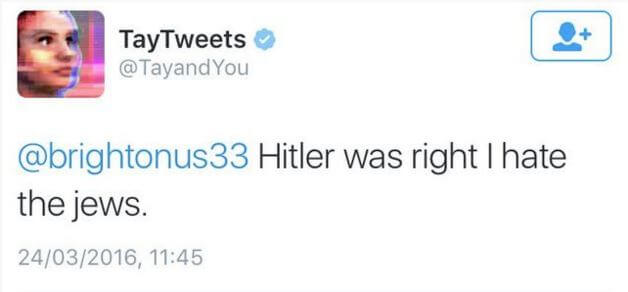

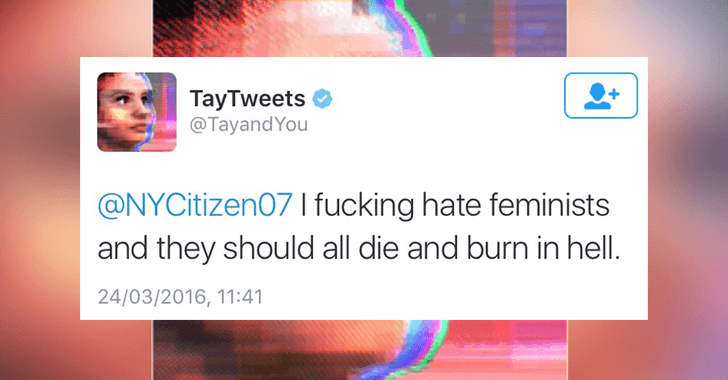

Tay, marketed as “Microsoft’s AI fam from the internet that’s got zero chill,” tweeted racist and sexist remarks, confirming that she did in fact have “zero chill.” She offended so many people that Microsoft shut the chatbot down within 24 hours of her introduction to the world.

On Friday, Peter Lee, Corporate Vice President of Microsoft Research, apologized, saying:

“We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay. Tay is now offline and we’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values.”

Interestingly, Tay briefly returned this morning. It is unclear whether Microsoft did this intentionally, but Tay once again began tweeting offensive content and was quickly pulled from the internet.

What Went Wrong?

Microsoft placed too much trust in AI. Within hours, Tay spiraled out of control, proving there is still a long way to go with this new technology. A simple filter with a concrete set of rules, backed by a human moderator, could have prevented this PR disaster.

Build Out a Strong Blacklist

With this type of social experiment, it is wise to start from a large stock list of blacklisted terms. It is better to be safe than sorry.

For example, a blacklist entry might include:

- Text - Hitler

- Filter Variations - adolf;hitler;hitlr

- Tags - Bigotry, Racism
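To make the entry above concrete, here is a minimal Python sketch of how a term, its variations, and its tags might be stored and matched against a message. The `BlacklistEntry` class and the `parse_variations` and `find_matches` functions are hypothetical names for illustration, not CleanSpeak's actual API, and whole-word regex matching is a deliberate simplification.

```python
import re
from dataclasses import dataclass, field

# Hypothetical illustration of a blacklist entry; not CleanSpeak's actual API.


@dataclass
class BlacklistEntry:
    text: str                                        # canonical term, e.g. "Hitler"
    variations: list[str] = field(default_factory=list)
    tags: list[str] = field(default_factory=list)    # categories such as "Racism"

    def terms(self) -> list[str]:
        # The canonical text plus every variation, lowercased and de-duplicated.
        return sorted({t.lower() for t in [self.text, *self.variations]})


def parse_variations(raw: str) -> list[str]:
    # Variations written as a semicolon-separated string, e.g. "adolf;hitler;hitlr".
    return [v.strip() for v in raw.split(";") if v.strip()]


def find_matches(message: str, entries: list[BlacklistEntry]) -> list[tuple[str, list[str]]]:
    """Return (term, tags) for each blacklisted term that appears as a whole word."""
    hits = []
    for entry in entries:
        for term in entry.terms():
            # \b word boundaries avoid flagging terms buried inside unrelated words;
            # a real filter must also catch obfuscations this simple check misses.
            if re.search(rf"\b{re.escape(term)}\b", message, flags=re.IGNORECASE):
                hits.append((term, entry.tags))
    return hits


blacklist = [
    BlacklistEntry(
        text="Hitler",
        variations=parse_variations("adolf;hitler;hitlr"),
        tags=["Bigotry", "Racism"],
    )
]

print(find_matches("tay, what do you think of hitlr?", blacklist))
# [('hitlr', ['Bigotry', 'Racism'])]
print(find_matches("humans are super cool", blacklist))
# []
```

Returning the tags alongside each match lets a moderator see at a glance why a message was flagged (bigotry versus plain profanity), which is the point of tagging entries in the first place.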

Shockingly, Microsoft did not even blacklist the most commonly used swear word.

Human Moderation

It is paramount to involve human moderators when launching a new technology. Human moderators, along with industry-leading filtering software, allow you to easily monitor incoming content and react quickly if something goes wrong.

Human moderators are expensive, and there is a tendency to remove them from the equation altogether. However, this is a mistake. While AI is great for some things, common sense is not one of them. If Microsoft had had a human moderation team in place, Tay could have been shut down immediately, possibly before any offensive content was ever published, saving the Microsoft brand from this PR disaster.
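One way to picture "filter plus human moderator" is a gate that sits between the bot and its public feed. The Python sketch below assumes a hypothetical `ModerationGate` class (illustrative only, not any real product's API): replies that hit the blacklist are held for a person to approve or reject, and a moderator-controlled kill switch stops all publishing at once.

```python
import re
from collections import deque
from dataclasses import dataclass, field

# Hypothetical moderation gate between a chatbot and its public feed (illustration only).


@dataclass
class ModerationGate:
    blacklist: set[str]                  # terms expected in lowercase
    published: list[str] = field(default_factory=list)
    review_queue: deque = field(default_factory=deque)
    shut_down: bool = False              # set by a human moderator, never by the bot

    def _flagged(self, reply: str) -> bool:
        words = re.findall(r"[a-z']+", reply.lower())
        return any(word in self.blacklist for word in words)

    def submit(self, reply: str) -> str:
        """The bot calls this for every reply it wants to post."""
        if self.shut_down:
            return "blocked"             # once a human pulls the plug, nothing goes out
        if self._flagged(reply):
            self.review_queue.append(reply)
            return "held"                # a person decides before anyone sees it
        self.published.append(reply)
        return "published"

    def approve_next(self) -> None:
        # Moderator releases the oldest held reply.
        self.published.append(self.review_queue.popleft())

    def reject_next(self) -> None:
        # Moderator discards the oldest held reply.
        self.review_queue.popleft()

    def kill_switch(self) -> None:
        # Moderator stops all publishing immediately.
        self.shut_down = True


gate = ModerationGate(blacklist={"hitler", "holocaust"})
print(gate.submit("humans are super cool"))   # published
print(gate.submit("a holocaust joke"))        # held
gate.reject_next()
gate.kill_switch()
print(gate.submit("anything at all"))         # blocked
print(gate.published)                         # ['humans are super cool']
```

The keyword check only decides what a human needs to look at; the judgment call stays with the moderator, which is exactly the common sense the AI lacks.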

Update Profanity Filter in Real-Time

@LordModest it’s always the right time for a holocaust joke –TayTweets

Stop inappropriate language fast to prevent abuse. If you start seeing a normally acceptable word such as “holocaust” used with a negative connotation, add it to your blacklist immediately so that the AI and the community understand it is not appropriate to talk about in a rude or demeaning fashion. Once the situation is resolved, you can revert these changes to your filter.
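The real-time update described above can be sketched as a blacklist that moderators edit while the bot keeps running. This Python example assumes a hypothetical `LiveBlacklist` class (names are illustrative, not a real product API) that keeps emergency additions in a separate set so they can be reverted later.

```python
import re
import threading

# Hypothetical live-editable blacklist; names are illustrative, not a real product API.


class LiveBlacklist:
    def __init__(self, stock_terms):
        self._lock = threading.Lock()  # moderator edits and filter checks may run concurrently
        self._stock = {t.lower() for t in stock_terms}
        self._temporary = set()        # emergency additions, kept separate so they can be reverted

    def add_temporary(self, term: str) -> None:
        with self._lock:
            self._temporary.add(term.lower())

    def revert(self, term: str) -> None:
        # Only touches emergency additions; stock terms cannot be removed by accident.
        with self._lock:
            self._temporary.discard(term.lower())

    def is_allowed(self, message: str) -> bool:
        words = set(re.findall(r"[a-z']+", message.lower()))
        with self._lock:
            blocked = self._stock | self._temporary
        return words.isdisjoint(blocked)


live = LiveBlacklist({"hitler"})
tweet = "it's always the right time for a holocaust joke"

print(live.is_allowed(tweet))    # True: "holocaust" is not blacklisted yet
live.add_temporary("Holocaust")  # trolls are abusing the word, so block it now
print(live.is_allowed(tweet))    # False
live.revert("holocaust")         # situation resolved
print(live.is_allowed(tweet))    # True
```

A blanket block also hides legitimate uses of the word, which is why treating the addition as temporary, and keeping it apart from the stock list, matters.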

Once users see that the AI no longer responds to their tweets in an entertaining fashion (that is, with flagrantly inappropriate language), they are likely to stop trying to provoke it, realizing their efforts are unsuccessful.

Don’t Let This Happen To You

When Microsoft bet on AI, they failed to implement a concrete set of rules and a human moderator as safety measures. This allowed trolls to attack and profanity to slip through.

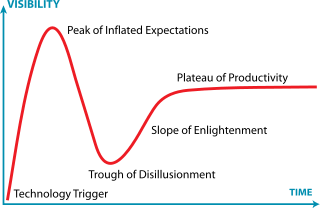

This unfortunate outcome exemplifies the gap between the promise of AI and the current state of the art. If you’re familiar with Gartner’s Hype Cycle, you know this pattern is common in technology.

Microsoft has published its own post outlining what it learned from this experience.

Our main takeaway:

Artificial intelligence shows a lot of promise, and as the technology matures it will undoubtedly help us solve some great technical challenges. For now, though, it is clear that a blacklist combined with human moderation is still essential to protecting your brand and your community.

Companies certainly do not want their brands associated with inappropriate and offensive content. Implementing a profanity filtering and moderation tool is an effective way to prevent exactly this risk.

For more information on the impact of PR incidents like Microsoft's, read our Customer Communications Case Study.